Defending the privacy of child sexual abuse victims online, in the EU and worldwide

The Chair of WePROTECT Global Alliance’s Board, Ernie Allen, reflects on the recent and ongoing challenge posed by EU legislation restricting the use of tools to detect and remove child sexual exploitation and abuse (CSEA) online. He explains how the Alliance responded by committing dedicated resources and mobilizing its membership in a collaborative effort to stand up for the rights and privacy of children and CSEA survivors, and for the tools needed to tackle the problem effectively.

A debate has been raging in Europe over what takes precedence: privacy or child protection. In September 2020, a Member of the European Parliament called us to ask for help. The European Commissioner for Home Affairs, Ylva Johansson of Sweden, had proposed a “temporary derogation”: an interim measure allowing the continued, voluntary use of technology tools to identify, report and remove child sexual exploitation and abuse (CSEA) online while the EU negotiated a permanent solution. The Commission proposed a five-year window.

Online child sexual exploitation and abuse, whether through attempts to groom and coerce children for the purpose of sexual abuse or through the production and distribution of child sexual abuse material (CSAM), is a major human rights issue worldwide.

In 2019, the New York Times wrote:

Twenty years ago, online images were a problem; 10 years ago, an epidemic. Now, the crisis is at a breaking point.

These images record and memorialize the rape and sexual assault of children (many younger than 12 and often as young as a few months). Each image is a crime-scene photo, and every time it is shared or downloaded, a crime is committed and a child is re-victimized.

Automated tools to fight the tsunami of CSAM online

In 2009, Microsoft launched PhotoDNA, a robust hashing technology for reliably identifying and removing CSAM from online services. Robust image hashing algorithms extract a distinctive digital signature from known harmful or illegal content; the signature of each new piece of content is then compared against these known signatures at the point of upload. Matching content can be removed and reported instantly.

How PhotoDNA technology works

PhotoDNA enables the creation of a unique digital signature of an image.

Once created, a signature can be compared to other images’ signatures to find matches.

This technique is known in the tech industry as “hashing”.
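PhotoDNA’s exact algorithm is proprietary and not public, so the sketch below swaps in a much simpler, well-known perceptual hash, the difference hash (“dHash”), purely to illustrate the general idea described above: derive a compact signature from an image, then flag content whose signature is close to one on a list of known material. The function names, the 8×9 input grid and the 10-bit matching threshold are illustrative assumptions, not PhotoDNA parameters, and image decoding and resizing are left out.

```python
from typing import Iterable, List


def dhash(pixels: List[List[int]]) -> int:
    """Difference hash ("dHash") of a grayscale image (illustrative stand-in, not PhotoDNA).

    Assumes the image was already converted to grayscale and resized to
    8 rows x 9 columns. Each of the 64 bits records whether a pixel is brighter
    than its right-hand neighbour, so a uniform brightness change leaves the
    signature unchanged.
    """
    if len(pixels) != 8 or any(len(row) != 9 for row in pixels):
        raise ValueError("expected an 8x9 grid of grayscale values")
    signature = 0
    for row in pixels:
        for left, right in zip(row, row[1:]):
            signature = (signature << 1) | int(left > right)
    return signature


def hamming(a: int, b: int) -> int:
    """Number of bits that differ between two signatures."""
    return bin(a ^ b).count("1")


def matches_known(signature: int, known: Iterable[int], max_distance: int = 10) -> bool:
    """True if the signature is within max_distance bits of any known signature.

    The default of 10 bits is a hypothetical tuning choice.
    """
    return any(hamming(signature, k) <= max_distance for k in known)


if __name__ == "__main__":
    # Hypothetical "known" image: brightness falls from left to right in every row.
    known_image = [[200 - 10 * c + r for c in range(9)] for r in range(8)]
    known_signatures = [dhash(known_image)]

    # A lightly edited copy: brightened overall, with one pixel altered.
    edited = [[p + 20 for p in row] for row in known_image]
    edited[0][1] = 255

    # An unrelated image: brightness rises from left to right instead.
    unrelated = [[10 * c + r for c in range(9)] for r in range(8)]

    print(hamming(dhash(edited), known_signatures[0]))  # 1
    print(matches_known(dhash(edited), known_signatures))  # True
    print(matches_known(dhash(unrelated), known_signatures))  # False
```

Because the signature captures relative brightness rather than exact pixel values, the brightened and slightly altered copy still lands within the threshold, whereas a cryptographic hash such as SHA-256 would change completely. Production tools such as PhotoDNA are designed to be far more robust than this toy example.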

PhotoDNA has been widely adopted around the world with remarkable success, and other tools using similar technology and approaches have since been developed. We now also have related technologies that can identify attempts to groom children for sexual purposes or to live-stream the abuse of children. These tools are crucial to our ability to disrupt and prevent the sexual abuse of children online. They allow law enforcement and child protection agencies to act proactively to protect children, reduce harm, and stop the creation of CSAM before it can be published and widely distributed online.

Building on the identification and reporting of these images, strong child victim identification programs have been established. Child victims are being located and rescued. Perpetrators are being brought to justice. In 2020, the COVID-19 pandemic made this crisis worse: children are spending more time online than ever before, and so are the people who seek to sexually groom, exploit and abuse them. We argued that this was the worst possible time for Europe to eliminate the use of these vital technologies.

The effect of coordinated political pressure

The European Commission’s proposal had to be resolved by 21 December 2020, the date on which the new European Electronic Communications Code came into effect. However, the proposal for a “temporary derogation” was opposed by a coalition within the Parliament that argued it violated users’ privacy rights. Working closely with our members in Europe and further afield, WePROTECT Global Alliance set out to make Members of the European Parliament aware of the potential harm to children if they failed to act. We took part in video calls with key leaders, mobilized our civil society members, contacted UN leaders, spoke to various bodies, and much more. We also engaged with privacy advocates, arguing that privacy and child protection are compatible, not mutually exclusive.

Throughout the process, we emphasized that WePROTECT Global Alliance is fervently pro-privacy, but we also asked a key question: “Whose privacy?” We argued that privacy rights are not absolute. We must also be concerned about the privacy of child victims, whose images remain on the internet unless we use these common-sense tools. We argued for compromise and committed to being part of the dialogue going forward in the search for a permanent solution.

The Parliament’s initial plan was to delay action until at least February 2021. Under that plan, the use of these tools, which last year alone generated reports of 69 million CSAM images and videos, would end in Europe on 21 December 2020. We and our members generated widespread media coverage and raised awareness, and the Parliament acted. On 7 December, its LIBE (Civil Liberties, Justice and Home Affairs) Committee approved a compromise allowing the continued use of CSAM detection and anti-grooming tools for two years instead of five, and on 14 December the full Parliament approved it.

However, the compromise was still subject to a Trilogue on 17 December: a three-way negotiation between representatives of the European Parliament, the European Commission and the Council of the European Union. Unfortunately, the December Trilogue did not reach agreement.

Without agreement, we are in the dark

It was widely reported that the Trilogue negotiators were willing to allow the tools to continue only for previously identified content, not for new, never-before-seen CSAM or for detecting child grooming. As a result, the Parliament’s compromise did not take effect.

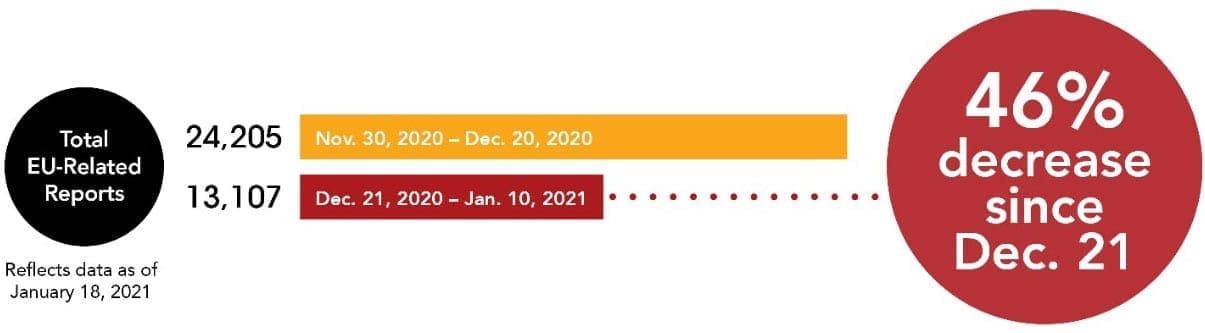

As we had feared, Facebook and other companies stopped using these tools to identify, report and remove CSAM in Europe. One media outlet estimated that, as a result, at least 250,000 images and videos of children being raped and sexually assaulted would go unidentified and unreported in Europe. The National Center for Missing and Exploited Children recently revealed that in the three weeks after the e-Privacy rules began to apply to these services, reports to its CyberTipline concerning child sexual exploitation in the EU plummeted by 46%.

In a welcome move, five companies (Google, LinkedIn, Microsoft, Roblox and Yubo) announced that they would continue to use these tools while hoping for a swift resolution. But the need for a lasting solution remained, and we continued to work to mobilize support.

To reach an interim solution, technical Trilogue meetings resumed in January and were scheduled to lead to a political meeting on 26 January. However, that meeting was postponed, with little clarity about when it will now take place or what obstacles stand between the negotiators and a workable solution. What is clear is that every day this political deadlock continues, children are put at increased, unnecessary and unacceptable risk, and opportunities to disrupt and prevent abuse online and to safeguard children are missed.

The debate and the search for a permanent solution continue, and so do WePROTECT Global Alliance’s efforts. Although the debate has not yet been resolved, this four-month process vividly demonstrates the key role the Alliance can play, now and in the future. In challenging policy debates like this one, we will continue to be a strong, loud voice for children and work to ensure that policymakers worldwide are fully aware of the true impact of their actions or inaction.

The views and opinions expressed in this article are those of the authors and do not necessarily reflect the official policy or position of WeProtect Global Alliance or any of its members.